The race for ever-more-powerful artificial intelligence models just got a major shakeup. On May 13, 2024, OpenAI unveiled GPT-4o, its newest flagship model. This isn’t just an incremental upgrade; GPT-4o promises significant improvements in speed, versatility, and overall capability.

What Makes GPT-4o Special?

One of the most exciting features of GPT-4o is its ability to handle multiple information formats. Unlike previous models that primarily focused on text, GPT-4o can understand and process information from images, videos, and even audio. This opens up a world of possibilities for more natural and interactive experiences. Imagine showing GPT-4o a picture of a dish on a restaurant menu and getting a detailed explanation of its ingredients, cultural background, and even recipe suggestions!
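For readers curious what the menu example might look like in practice, here is a minimal sketch. It assumes the request shape used by OpenAI's Chat Completions API, where an image is passed as an `image_url` content part next to the text question. The helper name `build_image_question` and the example URL are hypothetical, and the code only assembles the request body; it does not call any API.

```python
import json


def build_image_question(image_url: str, question: str, model: str = "gpt-4o") -> dict:
    """Assemble a Chat Completions-style request body that pairs a question with an image.

    Hypothetical helper for illustration only: it builds the payload
    and never contacts a server.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    # The text part carries the question about the picture.
                    {"type": "text", "text": question},
                    # The image part points at the photo of the dish.
                    {"type": "image_url", "image_url": {"url": image_url}},
                ],
            }
        ],
    }


# Placeholder URL, not a real image.
payload = build_image_question(
    "https://example.com/menu-dish.jpg",
    "What is this dish, and what ingredients does it usually contain?",
)
print(json.dumps(payload, indent=2))
```

Sending this body to the API would also require an API key and an HTTP client or the official SDK, which are left out here.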

But GPT-4o isn’t just about versatility. It’s also fast. OpenAI reports response times as low as 232 milliseconds for audio prompts, with an average of 320 milliseconds, which is similar to human response time in a conversation.

On top of these headline features, GPT-4o builds upon the strengths of its predecessor, GPT-4. Users can expect even better performance in text generation, comprehension, voice interaction, and image analysis.

Features of GPT-4o

Here’s what makes it special:

- Multimodal: Unlike previous models, GPT-4o can understand and process information across different formats – text, image, video, and audio. This allows for a more natural and versatile interaction.

- Speed: GPT-4o boasts significantly faster response times compared to its predecessors. It can react to audio prompts in as little as 232 milliseconds, nearing human response speed.

- Enhanced Capabilities: It builds upon the strengths of GPT-4, offering improved performance in text generation, understanding, voice interaction, and image analysis.

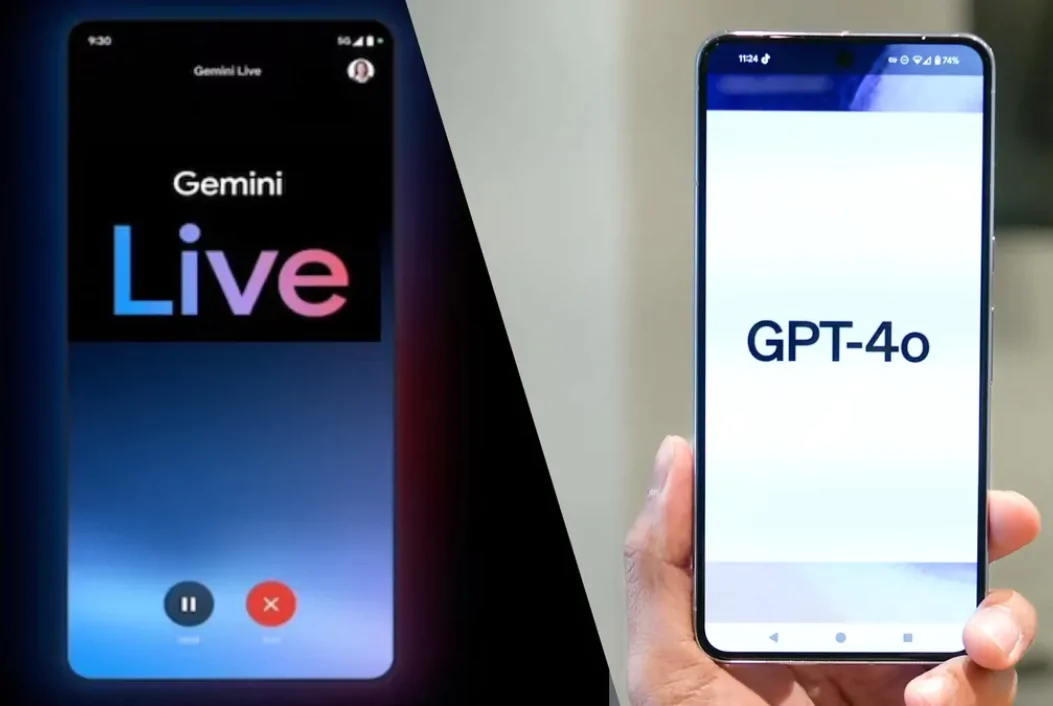

ChatGPT-4o vs Google Gemini Live

Google isn’t standing still after OpenAI introduced GPT-4o. It recently unveiled Gemini Live, a multimodal AI assistant with remarkable voice and video capabilities. View our preview of GPT-4o vs. Gemini Live to see how these powerful AI assistants compare.

GPT-4o as a live translation tool

OpenAI showcased GPT-4o’s live voice translation feature, which has the potential to revolutionize the international travel industry.

ChatGPT operates similarly to human interpreters at international conferences, serving as a bridge between speakers of disparate languages.

OpenAI CTO Mira Murati spoke in Italian during the demo; ChatGPT translated her words into English and relayed them to her colleague Mark. He then replied in English, which ChatGPT translated into Italian and spoke back to Murati with a dubious accent.

What Does This Mean for the Future?

The implications of GPT-4o are vast. It has the potential to revolutionize the way we interact with technology, opening doors for more intuitive search engines, intelligent assistants, and even educational tools that can cater to different learning styles.

OpenAI plans to gradually integrate GPT-4o into its popular ChatGPT platform. Free users will have limited access, while Plus, Team, and Enterprise users will enjoy increased capabilities and higher usage limits. Additionally, a new “Voice Mode” is planned for release soon, allowing for natural conversation via voice commands.

The Road Ahead

While GPT-4o represents a significant leap forward, it’s important to remember that AI development is an ongoing process. OpenAI is committed to responsible AI development and has emphasized the importance of safety and ethical considerations. As GPT-4o continues to roll out, it will be fascinating to see how it shapes the future of artificial intelligence and its impact on our daily lives.

ChatGPT-4o Cheat Sheet: Important Information

- Significant update for ChatGPT free users: OpenAI is opening up many features that were previously available only to subscribers. These include data analytics, image and document analysis, and personalized GPT chatbots.

- Why GPT-4o in ChatGPT is significant: GPT-4o is multimodal by design. OpenAI completely rebuilt and retrained it to handle non-text input and output, including speech-to-speech interaction.

- How to access GPT-4o: If you have a Plus subscription, you can access GPT-4o right now. Over the next few weeks, access will be progressively extended to all ChatGPT users on desktop, mobile, and the web.

- GPT-4 vs. GPT-4o: Although GPT-4o may represent an entirely new class of AI model, it did not demonstrate superior performance over GPT-4 on benchmark text-based tasks. Its deciding factors are likely to be live speech and video analysis. It is also more conversational.

- Top 5 newly added features in GPT-4o: GPT-4o comes with impressive new features and functionality that were previously unattainable. These include multilingual live translation and conversational speech, though those features are not yet operational.

- Alerting Siri: Next to OpenAI’s GPT-4o, and especially ChatGPT4, “Siri looks downright primitive,” says Mark Spoonauer, global editor in chief of Tom’s Guide.

FAQ

What is GPT-4o?

- GPT-4o (the “o” stands for “omni”) is a cutting-edge large language model developed by OpenAI. It represents a significant leap forward in AI capabilities, offering several key improvements over previous models.

What are the key features of GPT-4o?

- Multimodal: Unlike earlier models that primarily focused on text, GPT-4o can understand and process information from various formats, including images, videos, and audio. This allows for more natural and versatile interactions.

- Speed: One of GPT-4o’s most impressive features is its lightning-fast response time. It can react to audio prompts in as little as 232 milliseconds, nearing human response speed.

- Enhanced Capabilities: GPT-4o builds upon the strengths of GPT-4, offering significant improvements in text generation, comprehension, voice interaction, and image analysis.

How will GPT-4o be used?

The applications of GPT-4o are vast and still being explored. Here are some potential uses:

- Search Engines: GPT-4o’s ability to understand different formats could lead to more natural and intuitive search experiences.

- Intelligent Assistants: Imagine assistants that can understand your requests across different media, like showing a picture and asking for a related recipe.

- Education: Personalized learning tools that adapt to different learning styles could be developed with GPT-4o’s capabilities.

Is GPT-4o available for everyone?

- OpenAI plans to integrate GPT-4o gradually into its ChatGPT platform. Free users will have limited access, while Plus, Team, and Enterprise users will enjoy increased capabilities and higher usage limits.

Will GPT-4o replace humans?

- GPT-4o is a powerful tool, but it’s not designed to replace humans. It can automate certain tasks and enhance our abilities, but human creativity, critical thinking, and ethical decision-making will remain crucial.

Is GPT-4o safe?

- OpenAI emphasizes responsible development and is committed to building safe and ethical AI. As with any powerful technology, how GPT-4o is used will be critical.

Where can I learn more about GPT-4o?

- OpenAI Blog: [OpenAI Blog on GPT-4o]

- Tom’s Guide article: [Tom’s Guide on GPT-4o]

